Remember the California Gold Rush? Around 300,000 people descended on California in the late 1840s and 1850s to mine gold, yet only a few actually got rich from the gold itself. While the majority were focused on finding gold, a few people like Samuel Brannan became millionaires by selling supplies to the miners. Want to hear about another success story from the Gold Rush? Levi Strauss, the famed denim jeans maker. California is seeing another gold rush today, but this time for artificial intelligence, or AI. Google, Amazon, Microsoft, OpenAI: name any big cloud service provider or multi-billion-dollar startup like Anthropic, and all of them are betting big on large language models, spending billions of dollars each quarter to carve out a nascent but very promising and scalable AI market. All of them are focused on one thing, building bigger and more capable AI models, but one company is squarely focused on helping them achieve this by selling the shovels for their gold dreams: Nvidia. This article gives you both the storyline and the numbers behind this multi-trillion-dollar giant in the making, to convey the scale of its achievements and why investors are bullish on this one chipmaker. If you are not interested in the company's early history, you can skip straight to the recent financial performance and prospects of the company at the bottom.

P.S. – This article is purely meant for educational purposes. Do not treat any conclusions from this blog as buy or sell recommendations. Please do your own independent research for investment purposes. This blog is not responsible for any investment losses incurred by the reader. As a disclaimer, the writer of this blog doesn't hold any investment in the given stock.

The beginning of the new computing era

Nvidia has been in the news for eclipsing the market capitalisation of companies like Apple and Microsoft, an outcome almost no one would have thought possible until a few years ago. The AI story may have unfolded only recently, but it was decades in the making, which makes it even more worth talking about. I have always been a tech enthusiast and have known Nvidia for a long time, but I could not have imagined the scale it would achieve. I realised its potential only later, partly because I am not close enough to developments in AI and partly out of ignorance, since Indian markets offer so many lucrative high-growth stories for investing.

Coming to Nvidia, it was founded by Jensen Huang, Chris Malachowsky and Curtis Priem in April 1993. Before starting Nvidia, Jensen worked at LSI Logic and Advanced Micro Devices (AMD), which is in fact Nvidia's arch-rival today. Nvidia was born out of a need to solve 3D computer graphics for the gaming and multimedia market. Imagine: around 90 startups came up after Nvidia started, all trying to solve the same problem and commoditising the market from the outset. The only key differentiators were the performance or efficiency of the chips and time to market, and these conditions still hold today for the remaining players. At one point, all these startups were ahead of Nvidia and the company was close to bankruptcy. All of this changed in 1999, when Nvidia revolutionised the PC gaming market with the invention of the graphics processing unit (GPU). There are some subtle differences between what Nvidia developed in 1999 and the graphics cards that came before it; you can look up the history to understand more.

How exactly did the GPU change the landscape? The CPU in a PC is responsible for a diverse variety of tasks and cannot handle a large number of arithmetic operations simultaneously. It has a limited number of cores, essentially a limited number of workstations with diverse skills to allocate to running applications. A GPU accelerates this by offering thousands of cores and shared memory for the application to run on. Imagine thousands of workstations with one single focus: solving mathematical problems fast. GPUs are designed to optimise the performance of compute-intensive applications like games or generative AI. Both of these need parallel compute capability, whether to render images and graphics or to let ChatGPT reply fast enough, and to do so in a very energy-efficient way. You can say a CPU handles a diverse variety of tasks while compromising on efficiency, whereas a GPU is built specifically for parallel computation.

The CPU still runs the overall application but uses the GPU's capabilities to perform these functions extremely fast and within acceptable time limits. This saves power and gets the task done much more efficiently.
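To make the "thousands of workstations" idea a little more concrete, here is a minimal Python sketch of a data-parallel task. It is an illustration under simplifying assumptions, not GPU code: every output element depends only on its own inputs, so the index range can be split into independent chunks and handed to separate workers. The function names and the worker count are hypothetical. A GPU applies the same principle with thousands of hardware threads, whereas the Python threads here only show how the work is partitioned and will not speed up this arithmetic in CPython.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_add(a, x, y):
    """Serial version: a single worker walks through every element in turn."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def scale_and_add_parallel(a, x, y, workers=4):
    """Data-parallel version (hypothetical helper for illustration).

    Each output element is independent of the others, so the index range
    can be cut into chunks that separate workers process in any order.
    """
    n = len(x)
    if n == 0:
        return []
    step = (n + workers - 1) // workers  # ceiling division so no element is dropped
    chunks = [(lo, min(lo + step, n)) for lo in range(0, n, step)]

    def work(bounds):
        lo, hi = bounds
        return scale_and_add(a, x[lo:hi], y[lo:hi])

    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(work, chunks))  # map returns results in chunk order
    return [value for part in parts for value in part]


x = list(range(10))
y = [1] * 10
print(scale_and_add_parallel(2, x, y) == scale_and_add(2, x, y))  # True
```

The chunked result matches the serial one exactly because no element needs information from any other; that independence is what lets a GPU spread the same work over thousands of cores.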

Another foundation of AI development: CUDA

Nvidia's AI dominance doesn't come from GPUs alone. AMD and Intel both offer GPUs, and there is not much to differentiate the hardware on its own. The differentiation comes from the software stack on top of Nvidia GPUs. This stack is known as CUDA, or Compute Unified Device Architecture, and it is proprietary to Nvidia GPUs. It gave researchers and developers direct access to the parallel compute capability of Nvidia GPUs, something the competition did not develop at the time. Nvidia brought this ability to market in 2006, following a conscious decision Jensen took in the early 2000s after watching the industry. To date, there are no strong alternatives to this entire development ecosystem. To make sense of this, CUDA offers the low-level programming without which this acceleration isn't possible. Intel and AMD did not offer an equivalent product at the time, and without this kind of optimisation, general-purpose computing on their GPUs carried significant overheads. Nvidia has optimised it very well. Nobody could have imagined then how this move would change the trajectory of the companies in the industry. CUDA expanded the focus of GPUs from gaming and content to general-purpose accelerated computing. Tasks that could take weeks or months on CPUs could be done on GPUs in days. Imagine the cost and time savings. Initially, CUDA was focused on turning GPUs into scientific hardware, with the focus shifting to neural networks around 2015. Even Jensen couldn't have imagined the scale it would achieve, since scientific computing was a very small market. Competitors have since developed their own software stacks to compete with Nvidia, but they were very late to market, and the lead Nvidia has built over the last 18 years is paying off today.
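For readers curious what "direct access to parallel compute" looks like to a programmer: in CUDA, a developer writes a kernel function that the GPU runs once per thread, and each thread works out which data element it owns from its block and thread indices (`blockIdx.x * blockDim.x + threadIdx.x` in CUDA C++). The Python sketch below only simulates that indexing scheme with ordinary loops on the CPU. The function names `add_kernel` and `launch` are hypothetical, and this is not how CUDA code is actually written or executed.

```python
def add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body that, on a real GPU, every thread would run once, in parallel."""
    i = block_idx * block_dim + thread_idx  # global index, like blockIdx.x * blockDim.x + threadIdx.x
    if i < len(out):  # bounds check: the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Serial stand-in for a CUDA launch such as kernel<<<grid_dim, block_dim>>>(...)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 1000
a = [1.0] * n
b = [2.0] * n
out = [0.0] * n
threads_per_block = 256
blocks = (n + threads_per_block - 1) // threads_per_block  # round up to whole blocks
launch(add_kernel, blocks, threads_per_block, a, b, out)
print(blocks, all(v == 3.0 for v in out))  # 4 True
```

The bounds check matters because the grid is rounded up to whole blocks: 4 blocks of 256 threads give 1,024 threads for 1,000 elements, so 24 threads have nothing to do.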

Call it luck?

In 2012, far from Wall Street and mainstream tech, Nvidia's GPUs were used to win a research competition in image recognition. The trained neural network, known as AlexNet, showed a marked improvement in image-recognition performance and became a pivotal moment in the history of deep learning. After this, the doors to deeper AI research opened and other problems seemed solvable. It was this approach to solving AI problems that changed the fate of the technology industry and of Nvidia. The researchers used two Nvidia GPUs to perform a computationally intensive task that previously seemed impossible. While others were focused on the billion-dollar opportunities in gaming, Nvidia had opened itself up beyond gaming to general-purpose accelerated computing for science, and that effort secured its place as the technology partner at the dawn of deep learning. Since then, AI developers have focused on building for Nvidia GPUs and the ecosystem continues to grow. Today, CUDA comprises an entire development ecosystem, which simply makes it the top choice; there is no real alternative. If you want to understand more, here are links to two articles that will help you dive a bit deeper into Nvidia's moat. (https://www.nytimes.com/2023/08/21/technology/nvidia-ai-chips-gpu.html, https://www.turingpost.com/p/cvhistory6)

AI today

AI today is essentially focused on deep learning, a subset of machine learning in which algorithms learn from raw data much like humans do, look for patterns, and try to mimic the human brain in software. These models are called neural networks, and they form the basis of ChatGPT, self-driving cars and much more. From an investor's point of view, you can divide the AI development market into two halves: training and inference. Training is the phase in which the model learns from training data, adjusting its parameters (weights) to minimise errors in its output. Inference is when the trained model is deployed for use. You need chipsets for both categories. Training is more computationally intensive than inference, but the scale of inference can be huge because the model is used repeatedly. Imagine ChatGPT processing millions of requests each day using inference.
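The difference between training and inference can be seen in a toy example. The minimal Python sketch below assumes a one-weight linear model fitted by plain gradient descent on mean squared error, a drastic simplification of how neural networks are trained; the data, learning rate and function names are all hypothetical. Training loops over the data many times to adjust the parameters, while inference is a single cheap calculation that is then repeated for every request.

```python
def train(xs, ys, lr=0.05, epochs=1000):
    """Training: repeatedly nudge weight w and bias b to shrink the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params, x):
    """Inference: apply already-trained parameters to a new input; nothing is learned."""
    w, b = params
    return w * x + b


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # hidden rule the model should discover: y = 2x + 1
params = train(xs, ys)  # expensive, done once

# Cheap, but repeated for every "request"; at ChatGPT scale this adds up
answers = [infer(params, x) for x in range(1000)]
print(round(answers[10], 2))  # 21.0
```

Training here takes 1,000 passes over the data, while each prediction is one multiply and one add; the investor's point is that the second cost is paid again for every single user query.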

Nvidia today

Nvidia today holds a dominant share of the AI hardware market, with estimates ranging from 75% to 90% of the AI chip market. It is a fabless chip company: it focuses mainly on chip design and development and outsources production to TSMC. The company has a clear moat, with top tech companies choosing Nvidia for their needs and its products simply being better than the competition's. The market share data suggests Nvidia has a clear advantage over its peers and is expected to maintain it. 'For every $1 spent on an Nvidia GPU chip there is an $8 to $10 multiplier across the tech sector,' according to an August report by investment firm Wedbush. Companies are spending tens of billions of dollars every quarter to ramp up AI hardware in their data centres, currently led by the world's biggest tech companies, in particular Google, Microsoft, Amazon and Meta. You can see this in the capex these companies are undertaking to build data centres, both to provide AI solutions to their clients as cloud service providers and for captive consumption. Apple is set to bring AI features to millions of iPhone users this year, with Siri getting integrated with OpenAI's ChatGPT. (Here is a link for readers to grasp the scale of this trend: https://www.hindustantimes.com/business/heres-how-much-big-tech-giants-like-google-microsoft-apple-and-amazon-spent-on-ai-101722407832742.html). The trend of AI capex is broadening, with countries and companies from other sectors now jumping on the bandwagon. Traditional IT services companies are also pitching enterprise AI solutions to clients, as businesses are forced to upgrade their technology infrastructure to match the needs of tomorrow. By Nvidia's estimate, about a trillion dollars' worth of data centre infrastructure needs an upgrade, and all of it will be built with GPUs to accelerate computing.
According to Nvidia, the world is transitioning from general-purpose computing to accelerated computing, and human software developers will increasingly be replaced by AI-led code development. Think about the scale here. India employs millions of software engineers, a subset of whom (possibly more) are on track to become obsolete as a result of this AI-led data centre boom. Future AI models are expected to be even more computationally intensive, with next-generation models being 20-40x more intensive than existing ones.

Concentrated revenue but big cash flow with clear moat

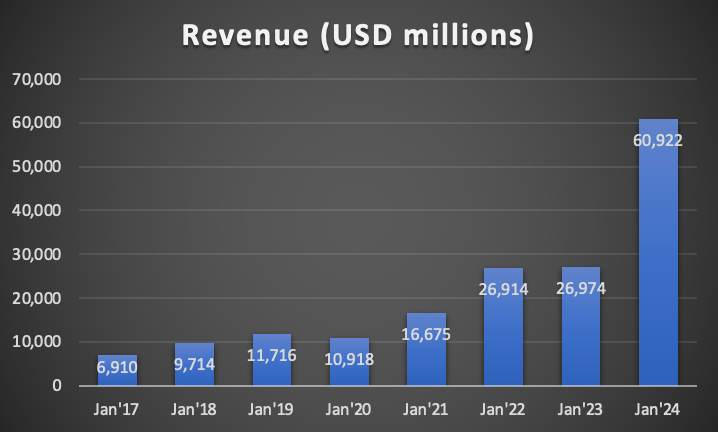

Nvidia's revenue has blown up since 2016. It was a high-growth company even before the AI race, but the launch of ChatGPT in November 2022 kicked off an arms race in AI, and many companies and countries were caught off guard by ChatGPT's release and capability. For a sense of scale, Nvidia's revenue went from close to $7 billion in fiscal year 2017 to almost $61 billion in fiscal year 2024, an 8.8x increase in turnover between FY17 and FY24! Remember, too, that their GPUs aren't cheap: an Nvidia H100 can cost more than $40,000 apiece. Tesla is reportedly set to expand its fleet of 35,000 H100 GPUs by 50,000, to 85,000, by the end of this year. You can see how quickly these numbers add up when global capex takes off.
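The 8.8x figure can be turned into an annual growth rate, and the Tesla order into a rough dollar amount, with a few lines of arithmetic. This Python sketch assumes Nvidia's reported revenue of about $6.91 billion for FY17 and $60.92 billion for FY24, and uses the article's $40,000 per H100 as a floor price; actual prices vary by deal. It shows that the 8.8x jump works out to roughly 36% compounded growth every year for seven years.

```python
def growth_multiple(start, end):
    """How many times larger the end value is than the start value."""
    return end / start


def cagr(start, end, years):
    """Compound annual growth rate: the steady yearly rate that turns start into end."""
    return (end / start) ** (1 / years) - 1


fy17_revenue = 6.91   # $ billion, reported FY2017 revenue ("close to $7 billion")
fy24_revenue = 60.92  # $ billion, reported FY2024 revenue ("almost $61 billion")
years = 2024 - 2017   # seven annual steps separate the two fiscal years

print(f"Multiple: {growth_multiple(fy17_revenue, fy24_revenue):.1f}x")  # 8.8x
print(f"CAGR: {cagr(fy17_revenue, fy24_revenue, years):.1%}")           # 36.5%

# Rough bill for Tesla's reported expansion, at the article's $40,000 per H100
extra_gpus = 85_000 - 35_000
print(f"Extra H100 spend: ${extra_gpus * 40_000 / 1e9:.1f} billion")     # $2.0 billion
```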

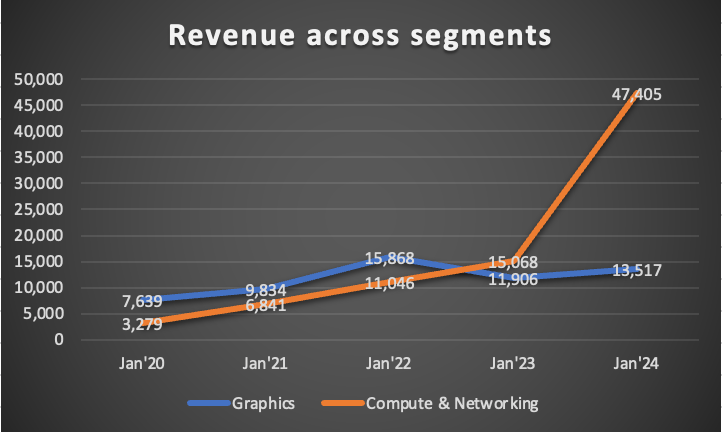

Nvidia has two reportable segments: Graphics, and Compute & Networking. The Graphics segment mainly covers gaming, VR, metaverse and other visual revenue. Compute & Networking covers data centre and networking platforms; AI-related revenue, from enterprise cloud to automotive, comes under this segment. Nvidia has historically been a cyclical company because of the nature of its end customers. The current capex boom may prove cyclical too, but the difference lies in the longevity and scale of the data centre capex boom. Demand for GPUs is only getting stronger.

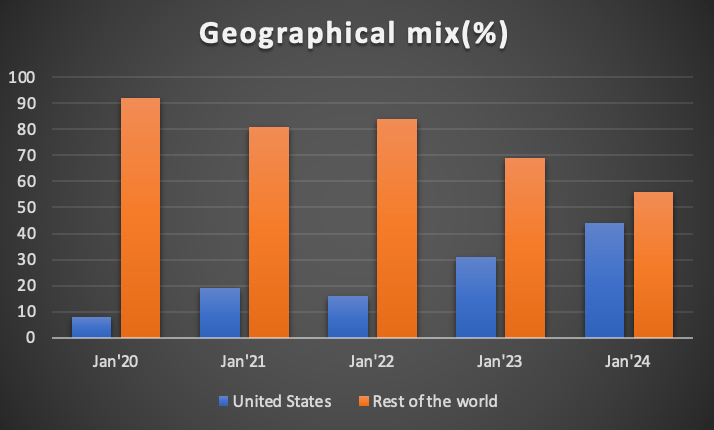

Nvidia's geographical concentration has grown over the years, with the United States forming an increasing share of overall revenue. This reflects the capex being undertaken by the Magnificent Seven in the US. Interestingly, capex seems to have taken off in the rest of the world as well.

Customer concentration risk has also grown for Nvidia. According to its regulatory disclosures, this risk was minimal until a few years ago, when no single customer had an outsized impact on revenue; that has changed materially over the last two years.

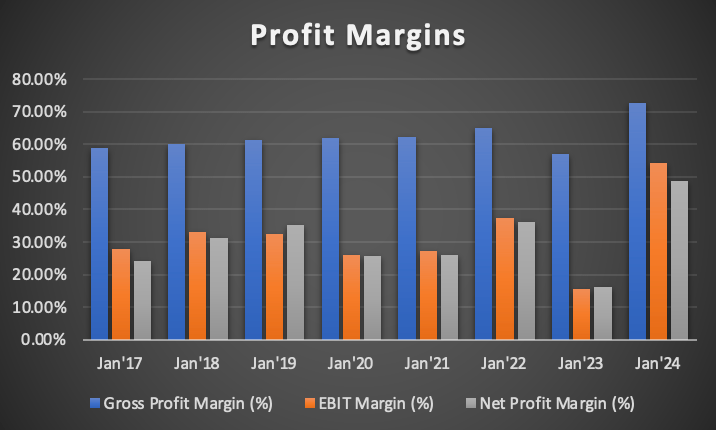

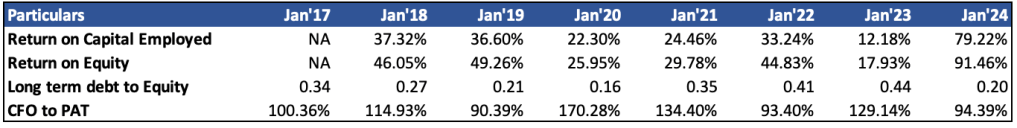

Let's talk about profitability and cash flow. Revenue growth of tens of billions alone can't pull a company into the big leagues of Apple and Microsoft. Nvidia has some of the best margins any business can have: a gross profit margin of more than 72%, an operating margin of 54% and a net profit margin of close to 50%. This wasn't the case in the 2000s, but it clearly shows the strategy paying off and the competitive moat the company now enjoys. The company has seen a sustained structural expansion in gross margins, with operating leverage benefits playing out over the last few years. Have you ever seen a hardware company command such high margins at such a huge scale? Gross margins improved from 58% in FY17 to 72% in FY24. In FY23, the company's failed acquisition of Arm resulted in a $1.35 billion charge, alongside a ramp-up in operating expenses due to research & development costs. For reference, R&D makes up 76% of the operating cost base. Total operating expenses have shrunk from 30% of revenue in FY17 to 18% in FY24, though margins have been volatile in between.
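The margin figures fit together through a simple identity: operating margin is gross margin minus operating expenses as a share of revenue. The Python sketch below plugs in the rounded ratios quoted above (treating 58% as the FY17 gross margin and 72% as FY24) to show how much of the operating-margin expansion came from better gross margins and how much from operating leverage. It ignores one-off items such as the Arm charge, so treat the outputs as approximations.

```python
def operating_margin(gross_margin, opex_ratio):
    """Operating margin = gross margin - operating expenses / revenue."""
    return gross_margin - opex_ratio


# Rounded ratios from the text (fractions of revenue)
fy17_gross, fy17_opex = 0.58, 0.30
fy24_gross, fy24_opex = 0.72, 0.18

fy17_op = operating_margin(fy17_gross, fy17_opex)
fy24_op = operating_margin(fy24_gross, fy24_opex)
print(f"Operating margin: FY17 {fy17_op:.0%} -> FY24 {fy24_op:.0%}")  # 28% -> 54%

# Split the expansion into its two sources
print(f"From gross margin: {fy24_gross - fy17_gross:+.0%}")           # +14%
print(f"From operating leverage: {fy17_opex - fy24_opex:+.0%}")       # +12%

# R&D is about 76% of operating costs, i.e. roughly 13.7% of FY24 revenue
print(f"R&D share of revenue: {0.76 * fy24_opex:.1%}")
```

Roughly half of the 26-point jump in operating margin comes from each source, which is why both the pricing power and the operating leverage arguments matter.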

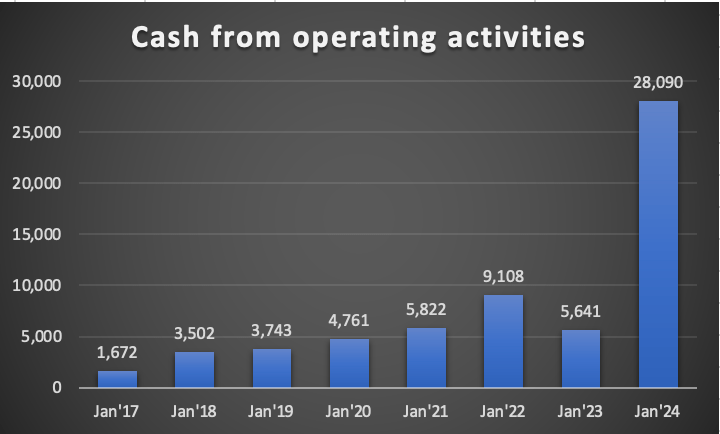

Cash flows have risen fast, in line with the growth in revenue and profitability. Nvidia has not seen much improvement in its working capital cycle: accounts payable have remained flat at 4%, while accounts receivable have risen sharply from 8.3% to 15.2% between FY17 and FY24. Inventories have remained flat at 8%. Nvidia has returned significant money to shareholders through buybacks and dividends, with the majority returned through buybacks. In the last two years, Nvidia has spent close to $20 billion returning money to shareholders, and it has announced plans for an additional $50 billion share buyback.

Prospects – risk or reward?

Nvidia is a one-stop shop for the data centre and accelerated computing needs of big tech and enterprises. It offers the entire data centre stack, from GPUs, frameworks and software to the networking inside the data centre. Nvidia acquired Mellanox for $7 billion to strengthen its data centre offering. From an investing perspective, both risk and reward need to be discussed. Nvidia has a lot going for it, and there's more to come.

Opportunities for Nvidia

Nvidia operates in a market where competitors are trying to chip away at or grab part of its revenue. But one has to think about the entire ecosystem that needs to be built around the hardware and software, along with the developers, to become as strong an ecosystem and as much the go-to choice for data centre customers. Startups and big tech companies such as Amazon, Google, Microsoft and Apple are all trying to develop custom AI chips for their data centres to reduce their costs. Remember those gross margins? Still, Nvidia continues to lead and looks likely to maintain its lead for years to come. Google currently offers its own chips only through Google Cloud. Amazon has developed its own training and inference chips for its customers' AI models. Nvidia is also much stronger in training than in inference, and you can see competition springing up to target inference specifically. Competitors like Groq and Cerebras are building dedicated inference compute solutions, an area Nvidia is not specifically targeting. Overall, the reward continues to look favourable for Nvidia. More opportunities will unlock over the next few years as software engineers focused on low-end coding are replaced by AI. Self-driving will require data centres. Enterprise AI is just getting started. The investment case right now rests purely on optimism that the world will undergo a major shift as AI penetrates deeper into the workplace and the consumer economy. Google's search engine is also under pressure from LLM-based search engines, with OpenAI vying for this market; SearchGPT was launched as a prototype in July 2024. In consumer tech, smartphones have been the first products to receive new AI features, though based on early reviews these features don't currently offer much value. Over time, as new use cases develop, AI may become more central to the entire smartphone ecosystem.
Google's latest Pixel 9 lineup offers strong AI features powered by Gemini, including real-time image generation and a voice-based AI assistant, some of which require a paid subscription. On the risk side, development cycles for these data centre chips are shortening. Nvidia is reducing time to market and developing chips faster than before, which brings a new set of risks.

Financials and management commentary

Nvidia is a very high-quality company with strong financials and strong cash flow. The problem lies in the industries it operates in, mainly gaming, data centres and cryptocurrency mining. Gaming and cryptocurrency have historically been volatile markets from a revenue point of view; the cyclicality is clearly visible in the Graphics segment revenue chart. Compute & Networking is showing a one-way surge driven purely by the capex being undertaken by big tech and countries. How long this capex lasts depends heavily on how fast new AI models make it to market and how well they are adopted. Are consumers and enterprises willing to pay extra to bring these AI features into their workflows? Near-term capex looks strong, judging by growth rates and management commentary. Nvidia is currently rolling out H200 chips and is producing the Blackwell platform, which is expected to ship later this year. According to Nvidia, the Blackwell chip has 208 billion transistors, 128 billion more than its Hopper predecessor, is capable of five times the AI performance, and can reduce AI inference costs and energy consumption by up to 25 times. Naturally, whoever gets them first gets a cost advantage. Nvidia expects to ship several billion dollars' worth of Blackwell in Q4 FY25. It also expects gross margins to expand to around 75%, slightly higher than the 72.7% of FY24, and operating margins should expand significantly thanks to better gross margins and operating leverage. Nvidia has already booked almost all of FY24's revenue in H1 FY25. The company is on track for revenue of $32 billion in Q3 FY25 and should close above that figure in Q4 FY25, which means revenue will roughly double year on year to more than $120 billion.
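The "doubling" claim can be checked with a quick bridge from the quarterly figures. This Python sketch assumes Nvidia's reported Q1 and Q2 FY25 revenue of roughly $26.0 billion and $30.0 billion, takes the article's $32 billion for Q3, and treats that same $32 billion as a floor for Q4; actual results may differ. It also recovers the implied Hopper transistor count from the Blackwell numbers quoted above.

```python
fy24_revenue = 60.9           # $ billion, FY24 revenue
h1_fy25 = 26.0 + 30.0         # $ billion, reported Q1 + Q2 FY25 revenue (rounded)
q3_fy25 = 32.0                # $ billion, article's Q3 FY25 figure
q4_fy25_floor = q3_fy25       # article: Q4 "should close above" Q3

fy25_floor = h1_fy25 + q3_fy25 + q4_fy25_floor
print(f"H1 FY25 as share of FY24: {h1_fy25 / fy24_revenue:.0%}")       # 92%
print(f"FY25 revenue floor: ${fy25_floor:.0f} billion")                 # $120 billion
print(f"Year-on-year growth: {fy25_floor / fy24_revenue - 1:.0%}")     # 97%

# Blackwell has 208 billion transistors, 128 billion more than Hopper
hopper_transistors = 208 - 128
print(f"Implied Hopper transistor count: {hopper_transistors} billion")  # 80 billion
```

Even with Q4 held flat at the Q3 level, growth lands just under 100%, so "roughly doubling" holds as long as Q4 comes in above Q3.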

Valuation & Return ratios

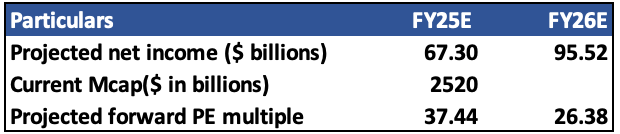

I have estimated revenue of $186 billion for Nvidia in FY26, a growth rate of 50%, driven by continued capex from big tech, the added opportunity of countries undertaking AI capex, and Blackwell ramping up through 2025. I cannot stress enough that the company may experience cyclicality, as investors and big tech may pause AI capex if returns on it arrive more slowly than anticipated. The company has also seen its China business shrink due to trade restrictions, with China's share of revenue falling to single digits from double digits before. These restrictions could tighten further due to the US-China trade war and US attempts to stifle China's AI progress. Profitability and cash generation should favour Nvidia as further operating leverage kicks in on top of a huge boost in revenue. The net profit margin is expected to expand from 49% in FY24 to 54% in FY25 and to remain flat or decline slightly over FY26. Blackwell is reportedly priced slightly higher than the Hopper architecture, which should support margins or deliver an upside surprise.
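The FY26 estimate is simple compounding, and it helps to see how sensitive the result is to the growth assumption. This Python sketch backs out the FY25 base implied by the article's numbers ($186 billion / 1.5 = $124 billion), then tabulates revenue and net income for a few growth rates, using a hypothetical net margin band of 50% to 54% around the "flat to slightly declining" expectation above. The 20% and 35% growth cases are hypothetical "capex pause" scenarios added for illustration, not forecasts from the text.

```python
def project(revenue, growth):
    """Revenue one year ahead at a given growth rate."""
    return revenue * (1 + growth)


fy26_target = 186.0                 # $ billion, the article's FY26 estimate
fy25_base = fy26_target / 1.5       # implied FY25 base at 50% growth: $124 billion

for growth in (0.20, 0.35, 0.50):   # 0.20 and 0.35 are hypothetical slowdown cases
    revenue = project(fy25_base, growth)
    low, high = revenue * 0.50, revenue * 0.54  # hypothetical net margin band
    print(f"growth {growth:.0%}: revenue ${revenue:.0f}B, "
          f"net income ${low:.0f}B to ${high:.0f}B")
```

Because revenue and margin multiply, a slowdown in growth moves net income far more than a couple of points of margin does, which is why the capex cycle is the key variable to watch.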

Given the current bullishness in the stock and the anticipated Federal Reserve rate cut, Nvidia's valuations could be sustained. The stock has faced a decline due to a Department of Justice antitrust investigation. Although the DOJ is looking into alleged unfair trade practices, there aren't any competitive alternatives to Nvidia, and the company is operating like a monopoly at the moment. Investors would do well to track the management commentary of big tech and enterprise IT companies. Even if Nvidia fails to deliver on revenue growth projections, the company has approved a big stock buyback plan of up to $50 billion. Once this AI capex cycle runs its course, Nvidia could see growth falling to the low double digits or even lower, depending on how AI investments pay off for end users. Another downside risk lies in the company's huge margins. Demand is currently strong, which may be playing a big role in the margin profile of the company. Although I don't see a fall in gross margins for now, opex or gross margins may take a hit if production issues emerge with the new Blackwell processors or if demand simply fails to pan out the way Nvidia estimated. Historically, GPUs have seen price fluctuations, and the semiconductor industry as a whole is prone to pricing erosion at times. Technological change could be a big risk for Nvidia: open-source GPU programming might erode Nvidia's competitive moat, and if it becomes a viable alternative, Nvidia could see a fast erosion in margins and market share. Still, Nvidia has strong cash flows, a strong moat and a very high-quality business well placed to capture the AI wave for the foreseeable future. Any dips in the stock could present a nice opportunity for investors to ride the growth wave with a company of Nvidia's scale.

-Shivang Agrawal on WordPress
